Gordon E. Moore, a co-founder and former chairman of Intel Corporation, the California semiconductor chip maker that helped give Silicon Valley its name, achieving the kind of industrial dominance once held by the giant American railroad or steel companies of another age, died on Friday at his home in Hawaii. He was 94.

His death was announced by Intel and the Gordon and Betty Moore Foundation. No cause was specified.

Moore could claim credit for bringing laptop computers to hundreds of millions of people and embedding microprocessors into everything from bathroom scales, toasters and toy fire engines to cellphones, cars and jets.

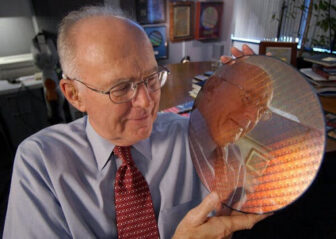

Moore holding a silicon wafer in 2005. Silicon was a key to Intel’s success.

Credit: Paul Sakuma/Associated Press

Moore had wanted to be a teacher but could not get a job in education. He later called himself an “accidental entrepreneur,” because he became a billionaire as a result of an initial $500 investment in the fledgling microchip business, which turned electronics into one of the world’s largest industries.

And it was he, his colleagues said, who saw the future. In 1965, in what became known as Moore’s Law, he predicted that the number of transistors that could be placed on a silicon chip would double at regular intervals for the foreseeable future, thus increasing the data-processing power of computers exponentially.

He added two corollaries later: The evolving technology would make computers more and more expensive to build, yet consumers would be charged less and less for them because so many would be sold. Moore’s Law held up for decades.

Through a combination of their brilliance, leadership, charisma and contacts, Moore and his partner and Intel co-founder, Robert Noyce, assembled a group widely regarded as among the boldest and most creative technicians of the high-tech age.

This was the group that advocated the use of the thumbnail-thin chips of silicon, a highly polished, chemically treated sandy substance — one of the most common natural resources on earth — because of what turned out to be silicon’s amazing hospitality in housing smaller and smaller electronic circuitry that could work at higher and higher speeds.

With its silicon microprocessors, the brains of a computer, Intel enabled American manufacturers in the mid-1980s to regain the lead in the vast computer data-processing field from their formidable Japanese competitors. By the ’90s, Intel had placed its microprocessors in 80 percent of the computers that were being made worldwide, becoming the most successful semiconductor company in history.

Much of this happened under Moore’s watch. He was chief executive from 1975 to 1987, when Andrew Grove succeeded him, and remained chairman until 1997.

As his wealth grew, Moore also became a major figure in philanthropy. In 2000, he and his wife created the Gordon and Betty Moore Foundation with a donation of 175 million Intel shares. In 2001, they donated $600 million to the California Institute of Technology, the largest single gift to an institution of higher learning at the time. The foundation’s assets currently exceed $8 billion, and it has given away more than $5 billion since its founding.

In interviews, Moore was characteristically humble about his achievements, particularly the technical advances that Moore’s Law made possible.

“What I could see was that semiconductor devices were the way electronics were going to become cheap. That was the message I was trying to get across,” he told the journalist Michael Malone in 2000. “It turned out to be an amazingly precise prediction — a lot more precise than I ever imagined it would be.”

Moore was predicting that electronics would become much cheaper over time as the industry shifted away from discrete transistors and tubes to silicon microchips. Over the years, his prediction proved so reliable that technology firms based their product strategies on the assumption that Moore’s Law would hold.

“Any business doing rational multiyear planning had to assume this rate of change or else get steamrolled,” said Harry Saal, a longtime Silicon Valley entrepreneur.

Arthur Rock, an early investor in Intel and friend of Moore’s, said: “That’s his legacy. It’s not Intel. It’s not the Moore Foundation. It’s that phrase: Moore’s Law.”

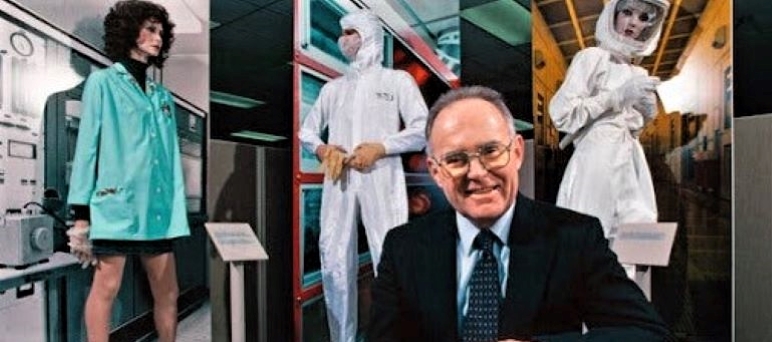

Moore during Intel’s early days. A few years earlier, he had predicted that the number of transistors that could be placed on a silicon chip would double at regular intervals, which became known as Moore’s Law.

Gordon Earl Moore was born on Jan. 3, 1929, in San Francisco. He grew up in Pescadero, a small coastal town south of San Francisco, where his father, Walter, was deputy sheriff and the family of his mother, Florence Almira (Williamson) Moore, ran the general store.

Moore enrolled at San Jose State College (now San José State University), where he met Betty Whitaker, a journalism student. They married in 1950. That same year, he completed his undergraduate studies at the University of California, Berkeley, with a degree in chemistry. In 1954, he received his doctorate, also in chemistry, from Caltech.

One of the first jobs he applied for was as a manager with Dow Chemical. “They sent me to a psychologist to see how this would fit,” Moore wrote in Engineering & Science magazine in 1994. “The psychologist said I was OK technically but I’d never manage anything.”

So Moore took a position with the Applied Physics Laboratory at Johns Hopkins University in Baltimore. Then, looking for a way back to California, he interviewed at Lawrence Livermore Laboratory in Livermore, Calif. He was offered a job, he wrote, “but I decided I didn’t want to take spectra of exploding nuclear bombs, so I turned it down.”

Instead, in 1956, Moore joined William Shockley, a co-inventor of the transistor, to work at a West Coast division of Beckman Instruments, a start-up unit whose aim was to make a cheap silicon transistor.

But the company, Shockley Semiconductor, foundered under Shockley, who had no experience running a company. In 1957, Moore and Noyce joined a group of defectors who came to be known as “the traitorous eight.” With each putting in $500, along with $1.3 million in backing from the aircraft pioneer Sherman Fairchild, the eight men left to form the Fairchild Semiconductor Corporation, which became a pioneer in manufacturing integrated circuits.

Bitten by the entrepreneurial bug, Moore and Noyce decided in 1968 to form their own company, focusing on semiconductor memory. They wrote what Moore described as a “very general” business plan.

“It said we were going to work with silicon,” he said in 1994, “and make interesting products.”

Their vague proposal notwithstanding, they had no trouble finding financial backing.

With $2.5 million in capital (the equivalent of about $22 million today), Moore and Noyce called their start-up Integrated Electronics Corporation, a name they later shortened to Intel. The third employee was Grove, a young Hungarian immigrant who had worked under Moore at Fairchild.

After some indecision about which technology to focus on, the three men settled on a new version of MOS (metal oxide semiconductor) technology called silicon-gate MOS. To improve the transistors’ speed and density, they made the gate from silicon instead of aluminum.

“Fortunately, very much by luck, we had hit on a technology that had just the right degree of difficulty for a successful start-up,” Moore wrote. “This was how Intel began.”

In the early 1970s, Intel’s 4000 series “computer on a chip” began the revolution in personal computers — although Intel itself missed the opportunity to manufacture a PC, for which Moore partly blamed his own shortsightedness.

“Long before Apple, one of our engineers came to me with the suggestion that Intel ought to build a computer for the home,” he recalled. “And I asked him, ‘What the heck would anyone want a computer for in his home?’”

Still, he saw the future. In 1963, while at Fairchild as director of research and development, Moore contributed a chapter to a book that described what was to become the precursor to his eponymous law, without the explicit numerical prediction. Two years later, he published an article in Electronics, a widely circulated trade magazine, titled “Cramming More Components Onto Integrated Circuits.”

“The article presented the same argument as the book chapter, with the addition of this explicitly numerical prediction,” said David Brock, a co-author of “Moore’s Law: The Life of Gordon Moore, Silicon Valley’s Quiet Revolutionary” (2015).

There is little evidence that many people read the article when it was published, Brock said.

“He kept giving talks with these charts and plots, and people started using his slides and reproducing his graphs,” Brock said. “Then people saw the phenomenon happen. Silicon microchips got more complex, and their cost went down.”

When Moore began in electronics in the 1950s, a single silicon transistor sold for $150. Later, $10 would buy more than 100 million transistors. Moore once wrote that if cars advanced as quickly as computers, “they would get 100,000 miles to the gallon and it would be cheaper to buy a Rolls-Royce than park it. (Cars would also be a half an inch long.)”

Moore’s survivors include his wife; two sons, Kenneth and Steven; and four grandchildren.

In 2014, Forbes estimated Moore’s net worth at $7 billion. Yet he remained unpretentious throughout his life, preferring tattered shirts and khakis to tailored suits. He shopped at Costco and kept a collection of fly lures and fishing reels on his office desk.

Moore’s Law is bound to reach its end as engineers run up against basic physical limits and the extreme cost of building factories capable of the next level of miniaturization. In recent years, the pace of miniaturization has slowed.

Moore himself commented from time to time on the inevitable end of Moore’s Law. “It can’t continue forever,” he said in a 2005 interview with Techworld magazine. “The nature of exponentials is that you push them out and eventually disaster happens.”

Holcomb B. Noble, a former science editor for The Times, died in 2017. Katie Hafner is a former staff reporter for The New York Times. Copyright, The New York Times, 2023.